Yulia Sandamirskaya

ZHAW - Zurich University of Applied Sciences

ICLS - Institute of Computational Life Sciences

Head of Research Center "Cognitive Computing in Life Sciences"

Yulia Sandamirskaya heads the Research Center "Cognitive Computing in Life Sciences" at Zurich University of Applied Sciences (ZHAW). Her research group develops neural-dynamics-based cognitive architectures for real-time, embedded AI systems, spanning sensing, planning, decision making, learning, and control for the next generation of assistive robots.

Archive of Projects in Figures (2005-2020):

I. Neuromorphic Architectures

We develop neuronal network architectures that are inspired by biological neuronal circuits and tailored for implementation in neuromorphic hardware. Our architectures aim to control embodied (e.g., robotic) agents that solve tasks in real-world environments. We use simple robotic vehicles, kindly provided by Jörg Conradt (KTH) and iniVation (Zurich), as well as neuromorphic dynamic vision sensors (DVS). Currently we are working on controllers for flying robots and robotic arms.

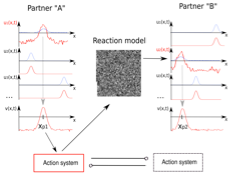

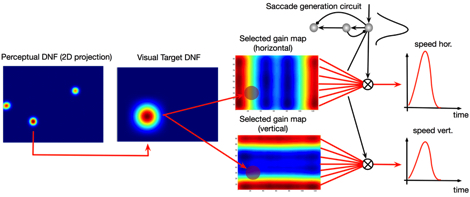

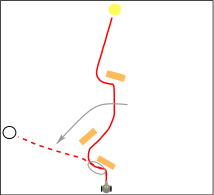

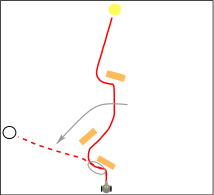

1. Obstacle avoidance and target acquisition

Details in: Blum et al, 2017; Milde et al, 2017a; Milde et al, 2017b

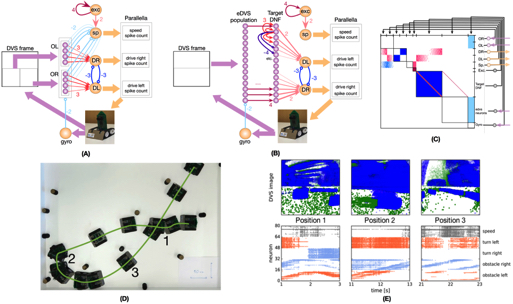

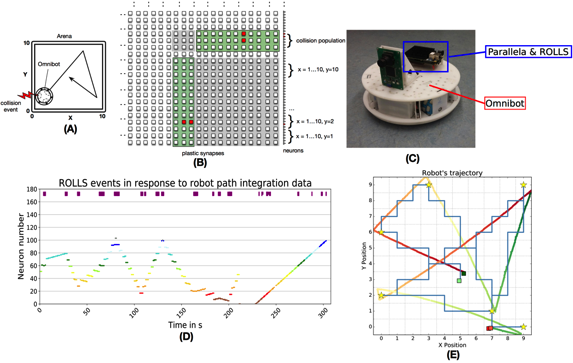

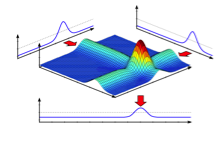

2. Path integration (head-direction system):

Details in: Kreiser et al, 2018

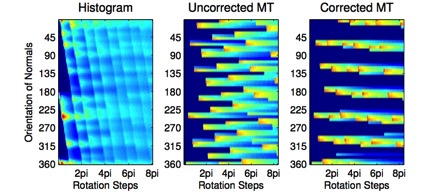

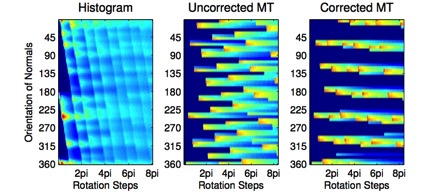

3. Map formation (on neuromorphic chip):

Details in: Kreiser et al, 2018b

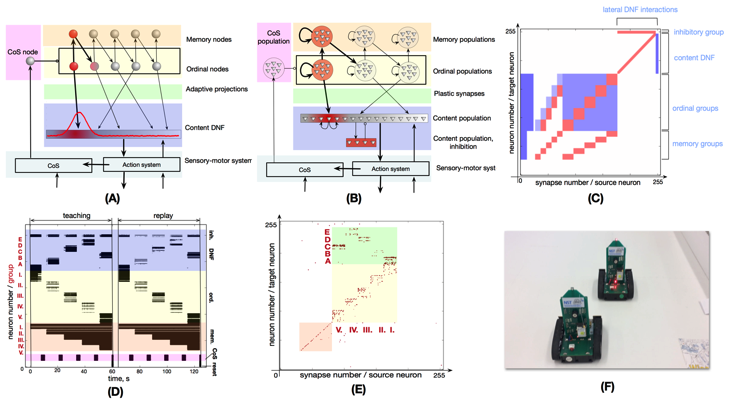

4. Sequence learning on chip:

Details in: Kreiser et al, 2018c

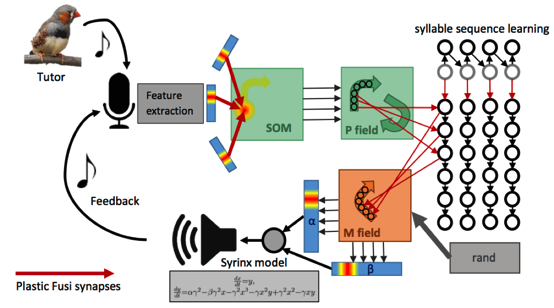

5. Bird song learning model:

Details in: Renner, MSc Thesis

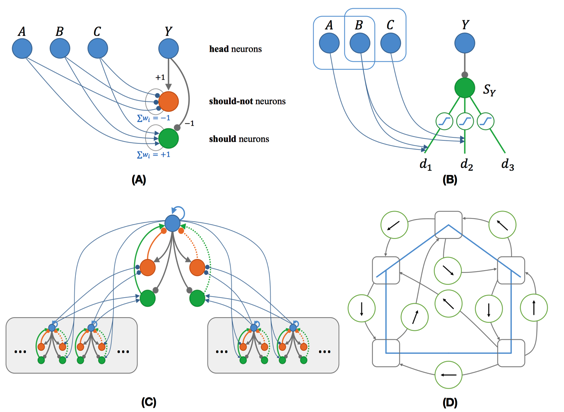

6. Learning a relational map with hypothesis verification:

Details in: Parra Barrero, MSc Thesis

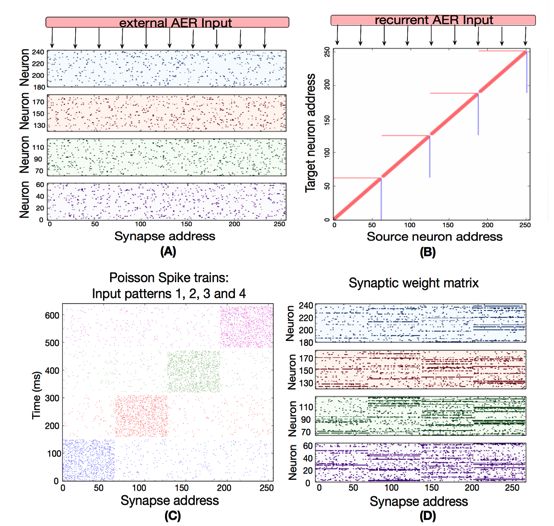

7. Unsupervised and supervised learning on chip:

Details in: Kreiser et al 2017d, Liang et al 2019

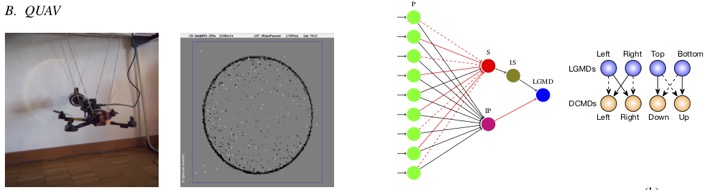

8. Obstacle avoidance using the locust Lobula Giant Movement Detector (LGMD) neuron model

Details in: Salt et al 2017

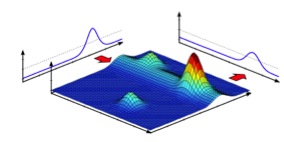

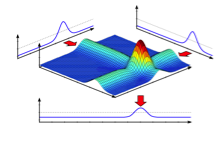

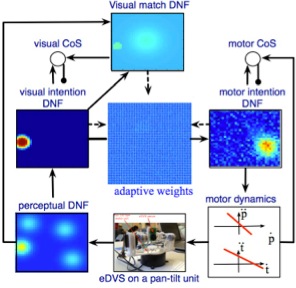

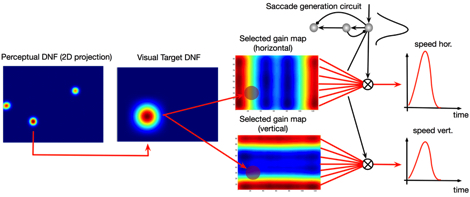

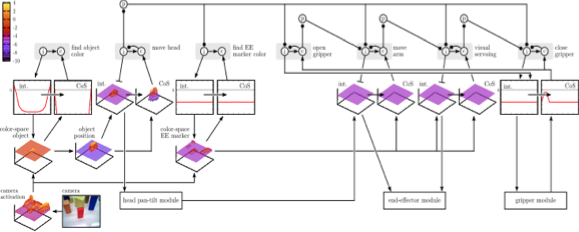

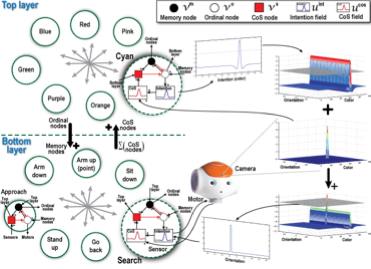

II. Dynamic Neural Fields (DNFs)

Before we began actively using neuromorphic hardware and focusing on neuronal architectures specifically designed for hardware realisation, we developed cognitive neural-dynamic architectures using the computational and conceptual framework of Dynamic Neural Fields (dynamicfieldtheory.org). Papers about the architectures whose snapshots are shown here can be found on the Publications page.

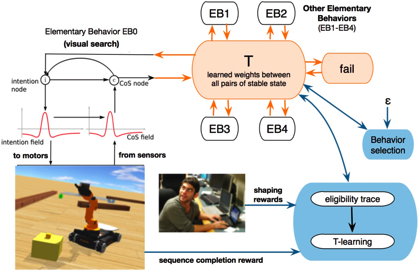
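As a rough illustration of the DNF framework mentioned above, the sketch below integrates a one-dimensional field of the standard Amari form, `tau * du/dt = -u + h + S(x) + lateral interaction`, where the lateral term combines local excitation with global inhibition. It is a minimal sketch under stated assumptions, not code from any of the architectures shown here: all parameter values and the function names (`simulate_field`, `sigmoid`, `gaussian`) are chosen for illustration only.

```python
import numpy as np

def sigmoid(u, beta=4.0):
    """Smooth output nonlinearity f(u) of the field (beta is an assumed steepness)."""
    return 1.0 / (1.0 + np.exp(-beta * u))

def gaussian(x, mu, sigma):
    """Unnormalised Gaussian bump centred at mu."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2)

def simulate_field(stimulus, steps=400, dt=1.0, tau=20.0, h=-5.0,
                   c_exc=1.0, sigma_exc=3.0, c_inh=0.5):
    """Euler-integrate a 1D neural field with local excitation and global inhibition.

    All parameter values are illustrative assumptions.
    """
    half_width = int(3 * sigma_exc)
    offsets = np.arange(-half_width, half_width + 1)
    kernel = c_exc * gaussian(offsets, 0.0, sigma_exc)  # local excitatory kernel
    u = np.full(stimulus.shape, h, dtype=float)         # start at the resting level
    for _ in range(steps):
        f = sigmoid(u)
        # Lateral interaction: excitation from neighbours minus global inhibition.
        lateral = np.convolve(f, kernel, mode="same") - c_inh * f.sum()
        u += (dt / tau) * (-u + h + stimulus + lateral)
    return u

x = np.arange(101)
strong = 7.0 * gaussian(x, 50, 3.0)  # input strong enough to pierce the threshold
weak = 3.0 * gaussian(x, 50, 3.0)    # input that stays below the threshold

u_strong = simulate_field(strong)
u_weak = simulate_field(weak)
print("peak with strong input:", u_strong.max() > 0, "at", int(np.argmax(u_strong)))
print("peak with weak input:  ", u_weak.max() > 0)
```

With these assumed parameters, the strong input drives the field through the detection instability and a localised activation peak forms at the stimulated location, while the weak input leaves the field below threshold everywhere.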

III. Learning in neural-dynamic cognitive systems

We have studied autonomous learning in neuro-dynamic architectures, i.e. learning that can co-occur with behavior. To enable this type of learning, the neuro-dynamic architecture requires structures that stabilise perceptual and motor representations and that signal when and where learning (i.e. an update of synaptic weights, resting levels, or memory traces) should happen. More can be found in our Publications; the main projects are listed below:

1. Haptic Learning

Details in Strub et al 2017, Strub et al 2014, Strub et al 2014 IROS

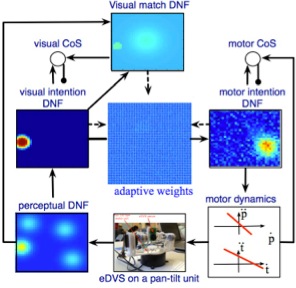

2. Learning and Adaptation in Looking

Details in Bell et al 2014; Sandamirskaya, Storck 2014; Storck, Sandamirskaya 2015; Rudolf et al 2015; Sandamirskaya, Conradt 2013a; Sandamirskaya, Conradt 2013b

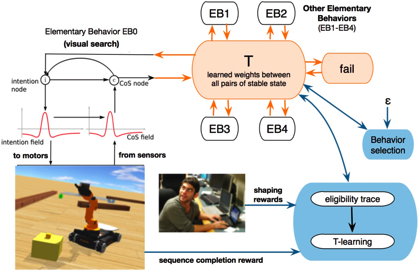

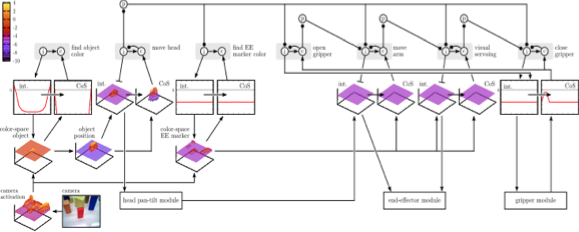

3. Reinforcement Learning in Neural Dynamics

Details in Luciw et al 2014, Luciw et al 2014 SAB, Luciw et al 2013 RSS, Luciw et al 2015 Paladyn, Kazerounian et al 2013 IJCNN
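To make the idea of learning that is gated by the behaving system itself more concrete, the sketch below updates a memory trace only while a stabilised activation peak exists (the "when") and only at the locations covered by that peak (the "where"). It is a simplified illustration, not the learning rule of any specific project listed above: the build and decay time constants, the gating threshold, and the name `update_memory_trace` are assumptions.

```python
import numpy as np

def update_memory_trace(trace, output, dt=1.0, tau_build=50.0,
                        tau_decay=500.0, gate_threshold=0.5):
    """One Euler step of a memory trace driven by a field's output f(u) in [0, 1].

    The trace changes only while some location is active (the 'when' signal).
    It builds up under the peak and slowly decays elsewhere (the 'where' signal).
    """
    if output.max() <= gate_threshold:
        return trace.copy()  # no stabilised peak: no learning at all
    build = (output - trace) * output / tau_build
    decay = -trace * (1.0 - output) / tau_decay
    return trace + dt * (build + decay)

# Hypothetical usage: a peak at index 3, then silence, then a peak at index 5.
trace = np.zeros(8)
peak_a = np.zeros(8)
peak_a[3] = 1.0
peak_b = np.zeros(8)
peak_b[5] = 1.0

for _ in range(100):
    trace = update_memory_trace(trace, peak_a)
after_a = trace.copy()

for _ in range(100):
    trace = update_memory_trace(trace, np.zeros(8))  # no peak: trace is frozen
after_silence = trace.copy()

for _ in range(100):
    trace = update_memory_trace(trace, peak_b)       # new peak: old trace decays slowly
after_b = trace.copy()
```

The two time constants are kept separate so that a trace can build up quickly while a peak is present and fade only slowly once behavior moves on.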

IV. Sequence Generation with Neural Dynamics

Sequence learning and sequence generation are a fundamental problem in neuronal dynamics that rely on attractor states to represent "observable" neuronal states, i.e. those states that result in behavior: movement or percept generation. In the following projects we have studied how sequences of attractor states can be represented (learned, stored, and acted out) in a neuronal substrate.

1. Behavioral Organization

2. Serial Order in Neural Dynamics

3. Multimodal Action Sequences

4. Hierarchical Serial Order

5. Action Parsing

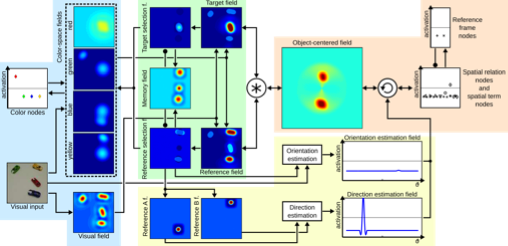

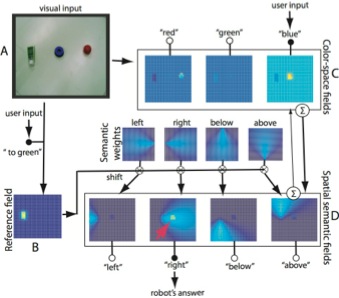

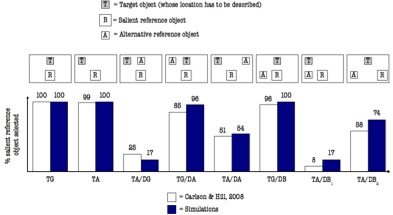

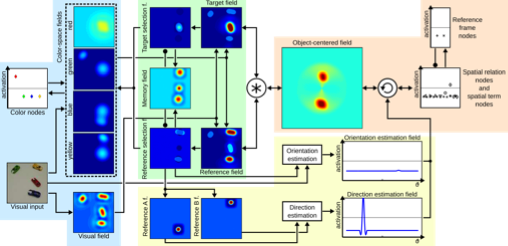

V. Spatial Language

Spatial language is language that expresses spatial relations between objects, e.g., that one object is to the left of, to the right of, or above another object. Terms that describe spatial relations have a direct link to the continuous concept of "space" and are therefore an easily accessible example for studying how symbols are grounded in continuous sensory-motor spaces. We have developed a model for grounding spatial language terms and have implemented it in a closed-loop behavioral setting on a robot observing a scene. The robot can interpret and produce scene descriptions using relational spatial terms, and can select a reference object autonomously.

1. A Robotic Implementation

2. Modeling Psychophysics

3. Extension to Complex Spatial Terms
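The link between a discrete spatial term and continuous space can be illustrated with a toy example that rates how well each term describes the position of a target object relative to a reference object. This is not the neural-dynamic model described above. It is a minimal sketch that assumes a simple cosine-shaped template per term, a coordinate frame with x pointing right and y pointing up, and the hypothetical names `term_fit` and `describe`.

```python
import math

# Assumed unit direction for each spatial term (x to the right, y up).
DIRECTIONS = {
    "right": (1.0, 0.0),
    "left": (-1.0, 0.0),
    "above": (0.0, 1.0),
    "below": (0.0, -1.0),
}

def term_fit(target, reference, term):
    """Rate in [0, 1] how well `term` describes target relative to reference."""
    dx = target[0] - reference[0]
    dy = target[1] - reference[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return 0.0  # coincident objects: no spatial relation applies
    ux, uy = DIRECTIONS[term]
    cosine = (dx * ux + dy * uy) / dist  # alignment with the term's direction
    return max(0.0, cosine)

def describe(target, reference):
    """Pick the best-fitting spatial term for the target relative to the reference."""
    return max(DIRECTIONS, key=lambda term: term_fit(target, reference, term))

print(describe((0.0, 0.0), (5.0, 1.0)))
print(describe((2.0, 6.0), (1.0, 0.0)))
```

The same graded ratings could in principle be used in the opposite direction, for example to pick the object that best matches a given term and reference, but selecting the reference object autonomously is beyond this sketch.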

VI. Autonomous A not B

In this work (also to be found on the Publications page), we developed a model for the famous "A-not-B error" task, which has been used for decades to study the development of visual and motor working memory and long-term memory in children. We demonstrated the model on a robotic agent, showing the functional use of improved visual working memory during behavior generation.

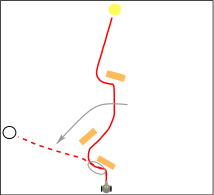

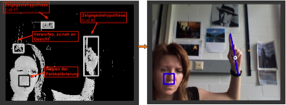

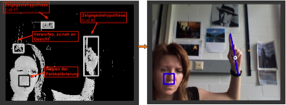

Pointing Gesture Recognition

Here, a simple module for recognising a pointing gesture and estimating the pointing direction was developed as part of the BMBF-funded project DESIRE (Deutsche Service Robotik Initiative), which developed a robotic assistant in 2005-2009.

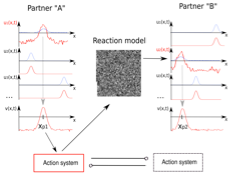

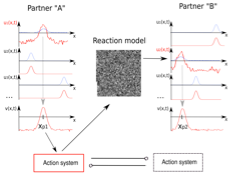

Modeling Turn Taking

In this project, a simple model of turn-taking in a dialogue was developed. It couples two neuro-dynamic architectures for sequence generation, each modelling one partner in the dialogue; the items in each sequence are planned utterances.

III. Learning in neural-dynamic cognitive systems

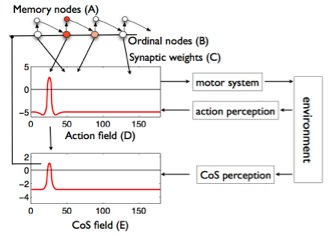

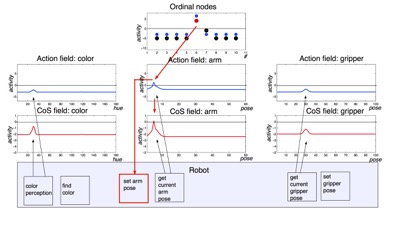

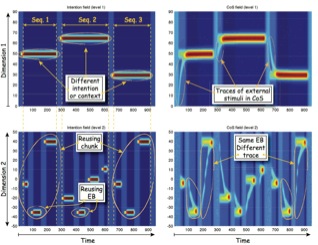

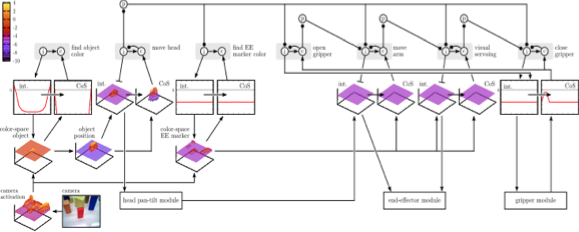

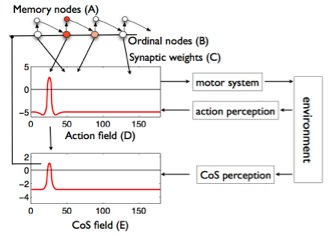

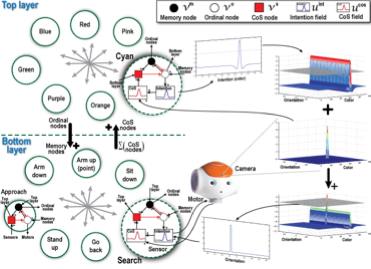

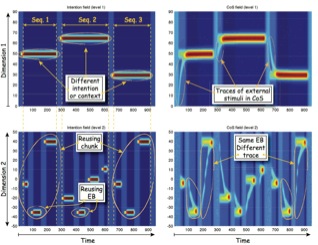

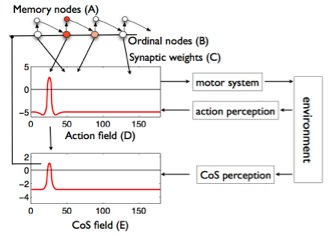

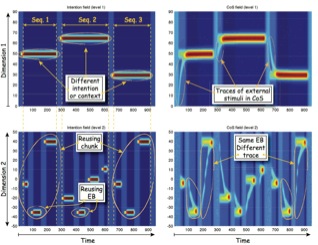

IV. Sequence Generation with Neural Dynamics

1. Behavioral Organization

2. Serial Order in Neural Dynamics

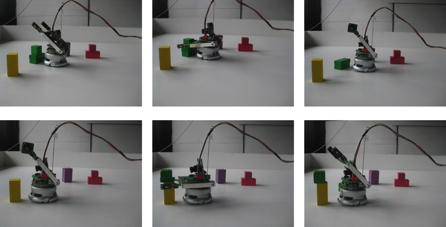

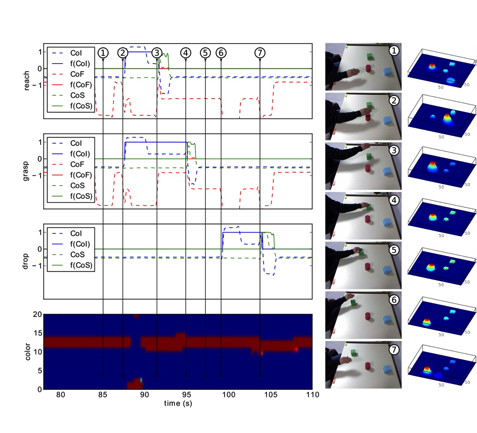

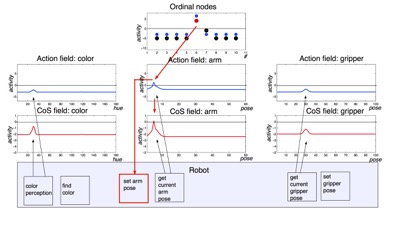

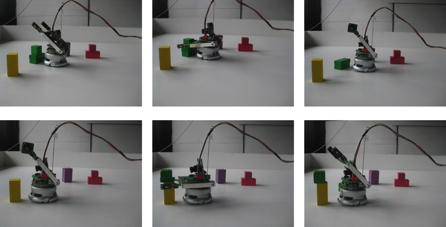

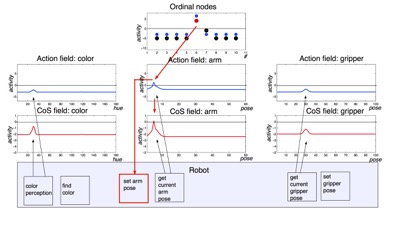

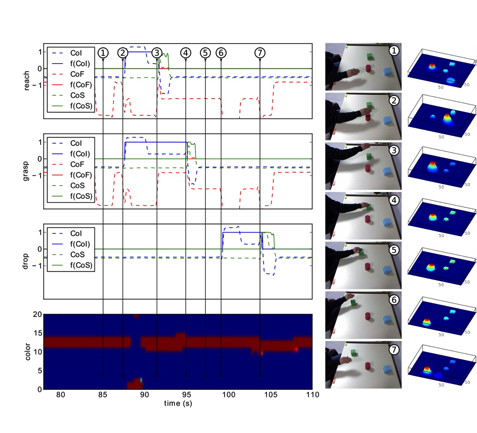

3. Multimodal Action Sequences

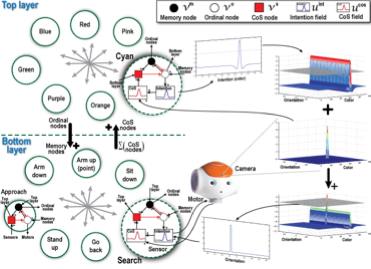

4. Hierarchical Serial Order

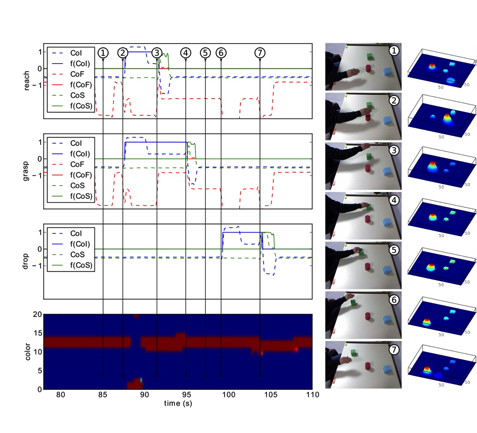

5. Action Parsing

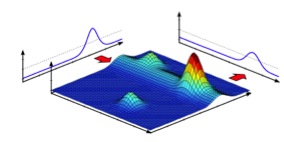

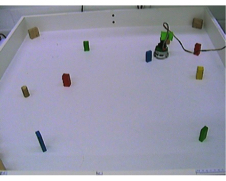

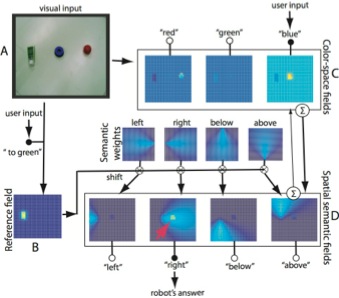

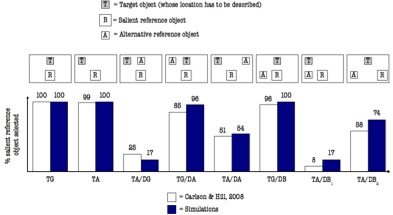

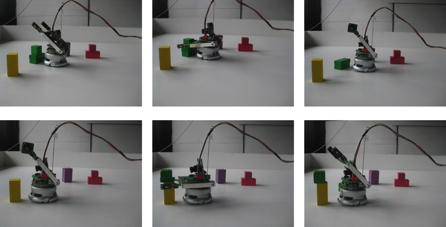

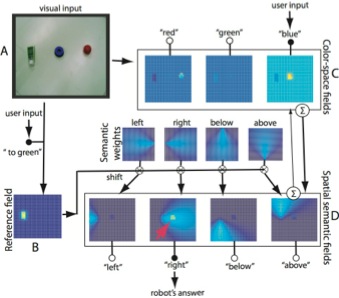

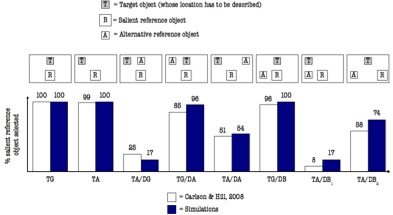

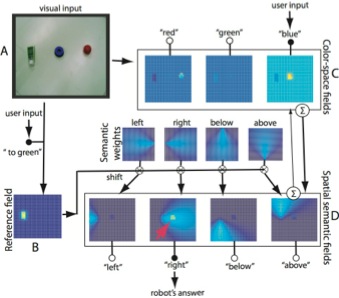

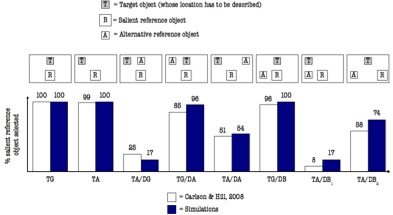

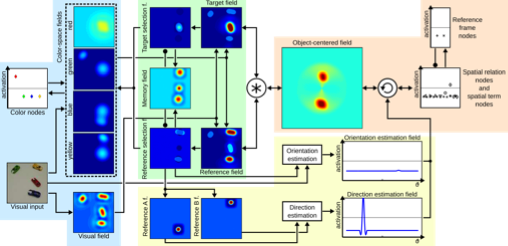

V. Spatial Language

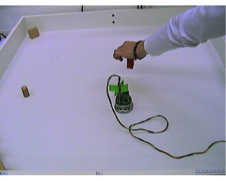

VI. Autonomous A not B

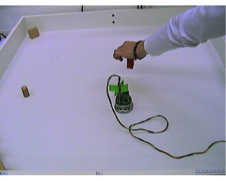

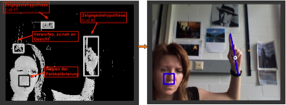

Pointing Gesture Recognition

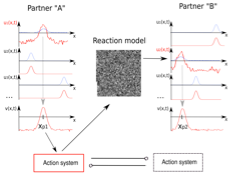
